A Biologically Inspired Scale-space for Illumination Invariant Feature Detection

Measurement Science and Technology 2013


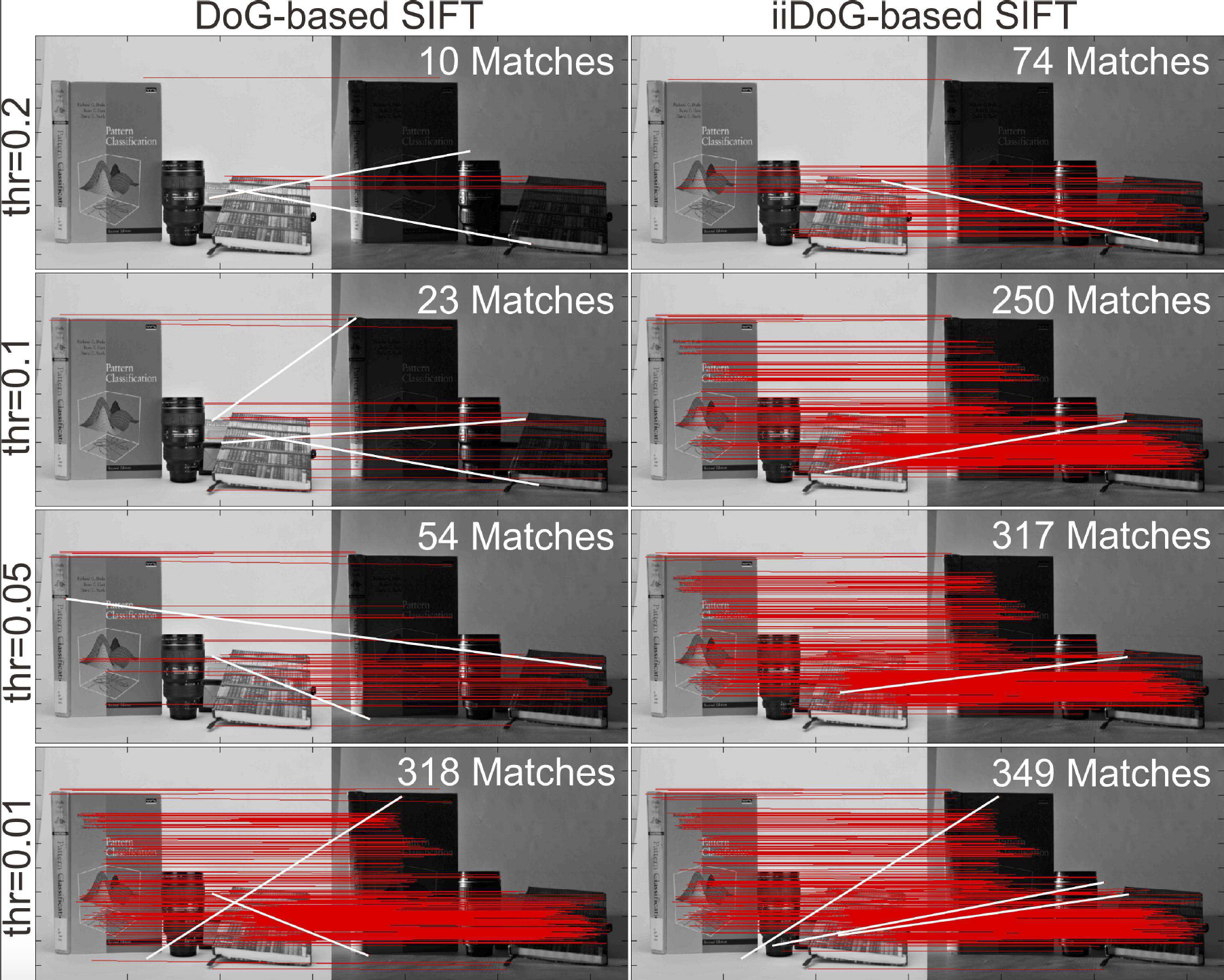

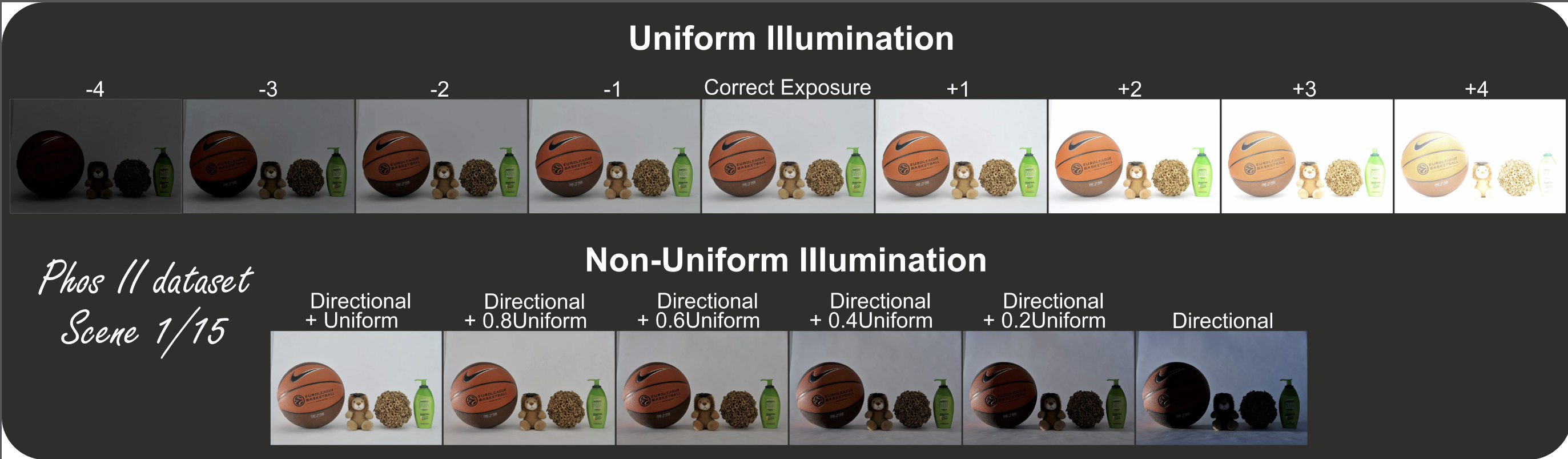

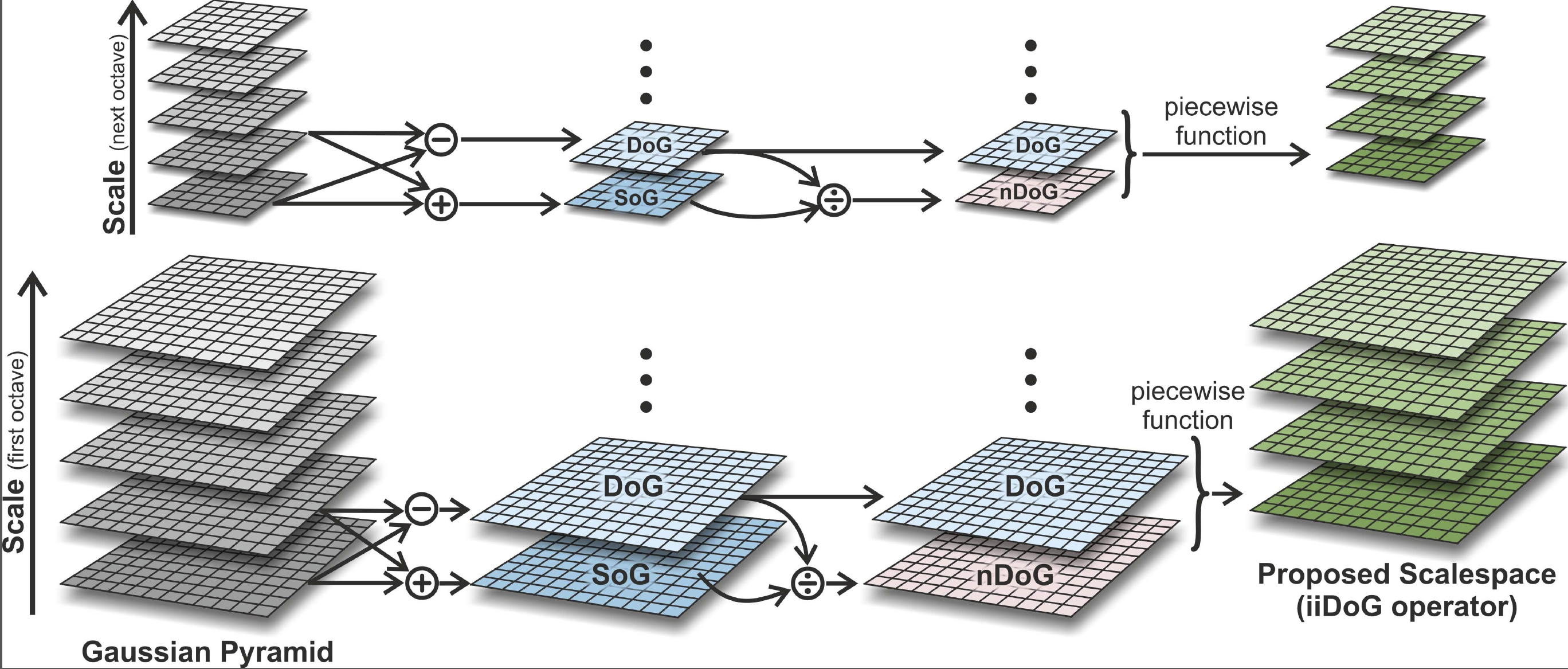

This paper presents a new illumination invariant operator that combines the nonlinear characteristics of biological center-surround cells with the classic difference of Gaussians (DoG) operator. It specifically targets underexposed image regions, exhibiting increased sensitivity to low contrast without affecting performance in correctly exposed regions. The proposed operator can be used to build a scale-space, which in turn can serve as part of a SIFT-based detector module. The main advantage of this illumination invariant scale-space is that keypoints can be detected in both dark and bright image regions using a single global threshold. To evaluate the degree of illumination invariance exhibited by the proposed operator and by existing ones, a new benchmark dataset is introduced. Compared to existing databases, it features a greater variety of imaging conditions, containing real scenes under various degrees and combinations of uniform and non-uniform illumination. Experimental results show that, compared to other approaches, the proposed detector extracts a greater number of features with a high level of repeatability, for both uniform and non-uniform illumination. This, along with its simple implementation, makes the proposed feature detector particularly appropriate for outdoor vision systems operating under uncontrolled illumination conditions.
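To illustrate why a single global threshold is problematic for the classic DoG, the following minimal sketch compares it with a contrast-normalized variant. The abstract does not give the formula of the proposed operator, so `normalized_dog` is only an assumed, Weber-like stand-in (the DoG divided by the sum of center and surround), not the paper's operator. The function names, the synthetic scene in `make_scene`, and the parameters `sigma`, `k`, and `eps` are likewise hypothetical choices made for illustration.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Return a normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3.0 * sigma + 0.5))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(image, sigma):
    """Separable Gaussian blur with reflective borders (output keeps the input shape)."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image, radius, mode="reflect")
    # Filter along rows, then along columns; "valid" removes the padding again.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def classic_dog(image, sigma, k=1.6):
    """Classic difference of Gaussians: center response minus surround response."""
    center = gaussian_blur(image, sigma)
    surround = gaussian_blur(image, k * sigma)
    return center - surround


def normalized_dog(image, sigma, k=1.6, eps=1e-6):
    """ASSUMPTION: illustrative contrast normalization, not the paper's operator."""
    center = gaussian_blur(image, sigma)
    surround = gaussian_blur(image, k * sigma)
    return (center - surround) / (center + surround + eps)


def make_scene(illumination):
    """Hypothetical scene: a bright square on a darker background, scaled by illumination."""
    reflectance = np.full((64, 64), 0.4)
    reflectance[24:40, 24:40] = 0.8
    return illumination * reflectance


def count_above(response, threshold):
    """Number of pixels whose absolute response exceeds one global threshold."""
    return int(np.count_nonzero(np.abs(response) > threshold))


if __name__ == "__main__":
    bright, dark = make_scene(1.0), make_scene(0.1)
    for name, op in (("classic", classic_dog), ("normalized", normalized_dog)):
        r_bright, r_dark = op(bright, 2.0), op(dark, 2.0)
        # One threshold, chosen on the well-exposed image, applied to both.
        threshold = 0.5 * np.abs(r_bright).max()
        print(name, count_above(r_bright, threshold), count_above(r_dark, threshold))
```

Because the classic DoG is linear, its response to the scene at one tenth of the illumination is one tenth as strong, so a threshold tuned for the bright image rejects every response in the dark one. The normalized variant responds almost identically in both cases, which is the kind of behavior a single global threshold requires.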

Contributions

- We introduce a new DoG-based operator, inspired by the center-surround cells of the human visual system (HVS), which exhibits improved illumination invariance compared to the classic DoG. This operator can be used to create an illumination invariant scale-space, which can improve scale-space-based local detectors such as SIFT by increasing their robustness to various kinds of illumination changes.

- We introduce a new dataset designed specifically to evaluate the illumination invariance of vision systems. Unlike existing datasets, the proposed one features scenes under various degrees and combinations of uniform and non-uniform illumination. As a result, to the best of our knowledge, it is the only existing dataset that can indicate how the performance of algorithms varies across different illumination and imaging conditions.
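The abstract reports repeatability across illumination conditions, but this summary does not define how it is measured. As a rough sketch only, the function below uses one common simplified definition: it assumes the camera is static between the two images (so no geometric mapping is needed), counts a keypoint as repeated when a keypoint in the other image lies within `radius` pixels, and divides by the smaller keypoint count. The function name, the `radius` default, and the greedy one-to-one matching are assumptions, not the paper's evaluation protocol.

```python
import numpy as np


def repeatability(kp_ref, kp_test, radius=2.0):
    """Fraction of keypoints re-detected within `radius` pixels (static camera assumed)."""
    kp_ref = np.asarray(kp_ref, dtype=np.float64).reshape(-1, 2)
    kp_test = np.asarray(kp_test, dtype=np.float64).reshape(-1, 2)
    if len(kp_ref) == 0 or len(kp_test) == 0:
        return 0.0

    # Pairwise Euclidean distances between reference and test keypoints.
    dists = np.linalg.norm(kp_ref[:, None, :] - kp_test[None, :, :], axis=2)

    # Candidate pairs within the radius, visited from closest to farthest.
    pairs = np.argwhere(dists <= radius)
    order = np.argsort(dists[pairs[:, 0], pairs[:, 1]])

    # Greedy one-to-one matching so that no keypoint is counted twice.
    used_ref, used_test = set(), set()
    matched = 0
    for i, j in pairs[order]:
        if i not in used_ref and j not in used_test:
            used_ref.add(i)
            used_test.add(j)
            matched += 1

    return matched / min(len(kp_ref), len(kp_test))
```

For example, comparing keypoints from a well-lit reference image of a scene with those from an underexposed image of the same scene gives a score between 0 and 1, where 1 means every keypoint in the smaller set was found again.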

Results